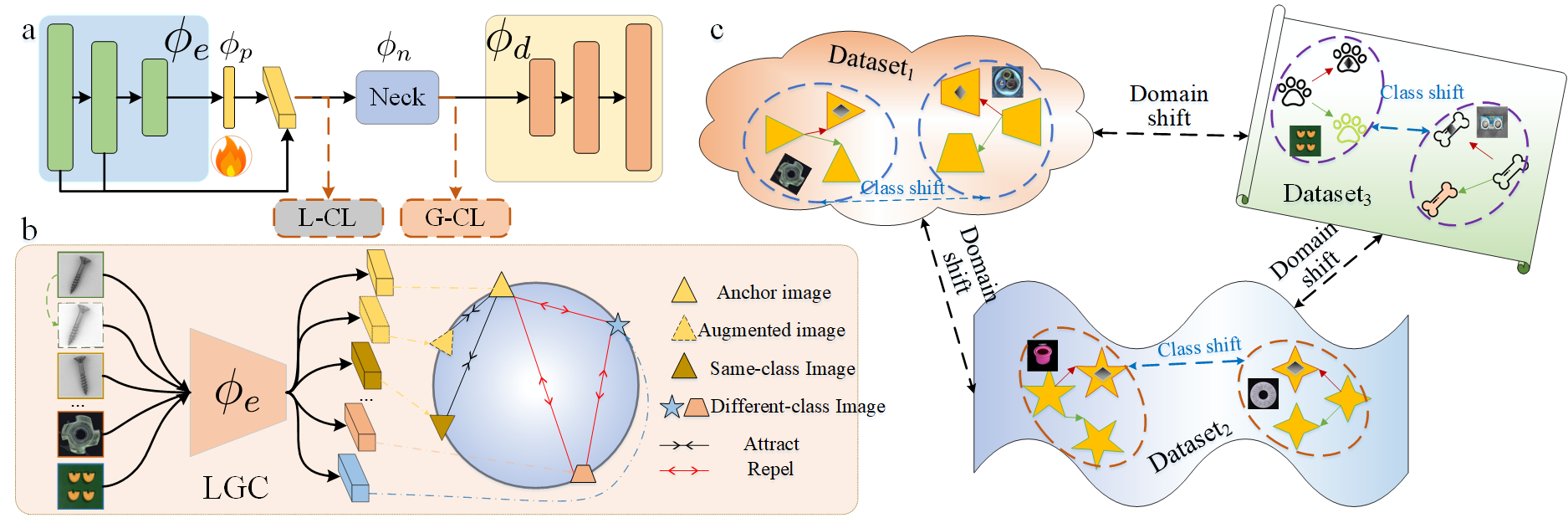

We focus on the question: "Why do one-for-one models degrade when trained on multiple classes?" Previous anomaly detection (AD) methods, such as RD, DeSTSeg, SimpleNet, and DRAEM, perform well but train a separate model for each category. We refer to this training strategy as one-for-one. It is hard to scale because of the computational cost and the burden of managing many models. However, when these one-for-one models are trained directly on multiple classes, their performance drops significantly.

We identify two issues that arise when one-for-one models are trained on multiple classes:

- Catastrophic forgetting: The model struggles to retain previously learned knowledge as new classes are introduced.

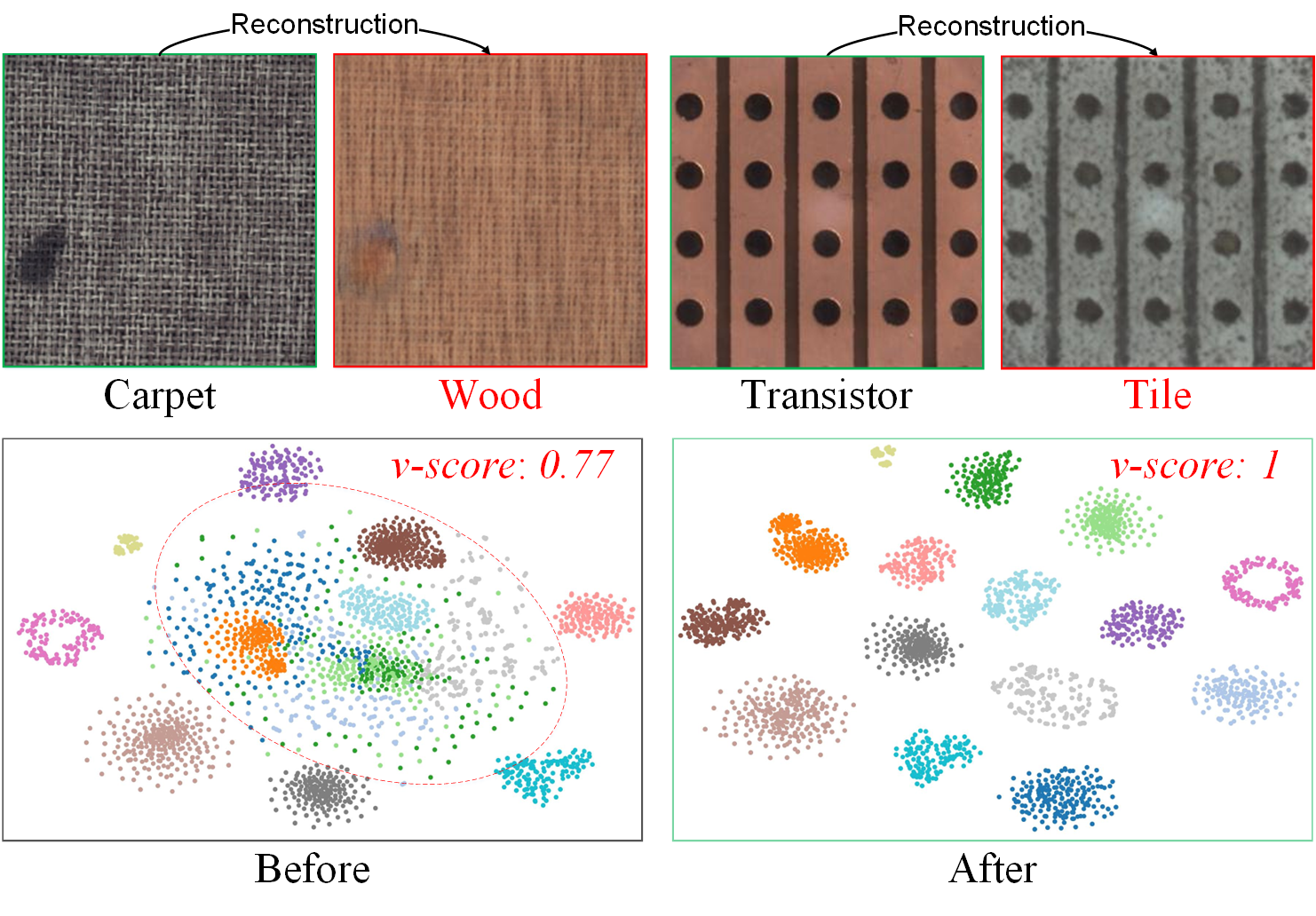

- Inter-class confusion: The model reconstructs an input with the appearance of a different class, for example reconstructing a 'carpet' image as 'wood' or a 'transistor' image as 'tile'. It struggles to preserve the correct texture style, particularly when an anomaly is stylistically similar to another class.
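One way to make inter-class confusion concrete is to check which class a reconstruction most resembles. The sketch below is a hypothetical diagnostic, not part of any of the methods named above: it assumes each class is summarized by a prototype feature vector (for example, the mean feature of its normal training images from some feature extractor, which is not shown) and flags a reconstruction as confused when its nearest prototype by cosine similarity belongs to a different class. The names `nearest_class`, `is_confused`, and the toy `prototypes` values are illustrative assumptions.

```python
import math


def cosine(a, b):
    """Cosine similarity between two equal-length feature vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    na = math.sqrt(sum(x * x for x in a))
    nb = math.sqrt(sum(y * y for y in b))
    if na == 0.0 or nb == 0.0:
        return 0.0  # treat zero vectors as dissimilar to everything
    return dot / (na * nb)


def nearest_class(feature, prototypes):
    """Return the class whose prototype is most cosine-similar to `feature`."""
    return max(prototypes, key=lambda c: cosine(feature, prototypes[c]))


def is_confused(true_class, recon_feature, prototypes):
    """Hypothetical check: True if the reconstruction looks more like another class."""
    return nearest_class(recon_feature, prototypes) != true_class


# Toy 3-d prototypes standing in for real per-class feature means (assumed values).
prototypes = {
    "carpet": [1.0, 0.0, 0.0],
    "wood": [0.0, 1.0, 0.0],
    "tile": [0.0, 0.0, 1.0],
}

# A 'carpet' input whose reconstruction drifted toward wood-like texture.
carpet_recon = [0.2, 0.9, 0.1]
print(nearest_class(carpet_recon, prototypes))
print(is_confused("carpet", carpet_recon, prototypes))
```

Cosine similarity is used here because it compares feature direction rather than magnitude; any other feature-space distance would serve the same illustrative purpose.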